And it does not seem to be declining, at least not soon. According to one report, the Big Data Analytics market was valued at $37.34 billion in 2018 and is projected to grow at a 12.3% CAGR from 2019 to 2027, reaching $105.08 billion by 2027. Today’s business world focuses more on customers, with personalized services and fruitful interactions. Hadoop can solve the complex challenges that businesses face and overcome the weaknesses of traditional approaches, hence its growing adoption. This is why learning these skills could transform your career and help you land the dream job you secretly pray for! But are you familiar with Big Data and Hadoop and how they benefit businesses? Don’t worry if your answer is no. In this article, we will first cover the concepts of Big Data and Hadoop and then explore some good resources where you can learn these skills. Let’s start!

Apache Hadoop and Big Data: What are they?

Big Data

Big Data refers to collections of large, complex data sets that are difficult to store and process using traditional methods or database management systems. It is a vast subject that involves various frameworks, techniques, and tools. Big Data includes the data produced by many applications and devices, such as black boxes, transport systems, search engines, stock exchanges, power grids, and social media, and the list goes on. The processes involved in Big Data include capturing, storing, curating, sharing, searching, transferring, visualizing, and analyzing data. Big Data comes in three formats: structured, unstructured, and semi-structured. The benefits of Big Data are:

- Boosts organizational efficiency while cutting extra expenses
- Helps you tailor your offerings to customers’ needs, demands, beliefs, and shopping preferences for better sales and branding
- Helps ensure that the right employees are hired
- Results in better decision-making
- Fuels innovation with deeper insights
- Improves healthcare, education, and other sectors
- Optimizes pricing for your products and services

Apache Hadoop

Apache Hadoop is an open-source software framework that organizations use to store large amounts of data and run computations on it. It is written mainly in Java, with some native code in C and shell scripts. Hadoop became an Apache Software Foundation project in 2006. It is essentially a tool for processing Big Data and turning it into meaningful insights that generate revenue and other benefits. In case you are wondering how the two are related: the Hadoop ecosystem provides the means to store and process Big Data. Components of the Hadoop ecosystem include Tez, Storm, Mahout, and MapReduce, among others. Hadoop is affordable yet highly scalable and flexible, and fault tolerance is among its prized features. This is why its adoption is growing rapidly. The benefits of Hadoop are:
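To make the MapReduce idea concrete, here is a minimal, local Python sketch of the classic word-count job. It only imitates the three phases (map, shuffle, reduce) inside a single process; it is not Hadoop’s Java MapReduce API, and the function names are illustrative.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    # Emit (word, 1) for every word in one input record.
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    # Group intermediate values by key, as the framework does between map and reduce.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    # Combine all values for one key into a single result.
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(intermediate)
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


if __name__ == "__main__":
    docs = ["Big data needs big tools", "Hadoop processes big data"]
    print(word_count(docs))
```

For the two sample lines, the result maps `big` to 3 and `data` to 2, with every other word counted once. On a real cluster, Hadoop runs many map and reduce tasks in parallel across nodes and performs the shuffle over the network.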

- The capability to store and process huge amounts of data in a distributed way
- High computing power for fast processing
- Strong fault tolerance: data processing is protected against hardware failure. If a node fails, its jobs are automatically redirected to other nodes, so the computation keeps running.
- Easy scaling: you can handle more data simply by adding more nodes.
- The flexibility to store any amount of data and use it however you want
- Cost savings: as Hadoop is a free, open-source framework, you save a lot of money compared to an enterprise solution.
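Much of this fault tolerance comes from HDFS splitting files into blocks and storing several copies of each block on different nodes. The sketch below assumes HDFS’s default 128 MB block size and replication factor of 3, and uses a simplified round-robin placement (real HDFS uses a rack-aware placement policy); all function names are hypothetical.

```python
import math
from typing import Dict, List, Set

BLOCK_SIZE_MB = 128  # HDFS default block size in Hadoop 2 and 3
REPLICATION = 3      # HDFS default replication factor


def split_into_blocks(file_size_mb: int, block_size_mb: int = BLOCK_SIZE_MB) -> int:
    # Number of blocks needed; the last block may be only partially filled.
    return math.ceil(file_size_mb / block_size_mb)


def place_replicas(num_blocks: int, nodes: List[str],
                   replication: int = REPLICATION) -> Dict[int, List[str]]:
    # Simplified round-robin placement: each block goes to `replication` distinct nodes.
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block: [nodes[(block + i) % len(nodes)] for i in range(replication)]
        for block in range(num_blocks)
    }


def readable_blocks(placement: Dict[int, List[str]], failed: Set[str]) -> List[int]:
    # A block stays readable while at least one replica sits on a healthy node.
    return [b for b, replicas in placement.items()
            if any(node not in failed for node in replicas)]


if __name__ == "__main__":
    nodes = ["node1", "node2", "node3", "node4"]
    layout = place_replicas(split_into_blocks(500), nodes)  # 500 MB file -> 4 blocks
    print(layout)
    print(readable_blocks(layout, failed={"node2"}))
```

With three replicas per block, losing any one or two nodes in this example leaves every block readable; only when all three holders of a block fail does that block become unavailable.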

How are enterprises adopting Big Data and Hadoop?

Hadoop and Big Data have great market prospects across industry verticals. In this digital age, emerging technologies produce enormous volumes of data, and Hadoop and Big Data tools can store and process this data efficiently so that enterprises can grow even more. From e-commerce, media, telecom, and banking to healthcare, government, and transportation, industries have benefited from data analytics, which is why the adoption of Hadoop and Big Data is skyrocketing. But how? Let’s look at some industries and how they use Big Data.

- Media, communication, and entertainment: Businesses use Hadoop and Big Data Analytics to analyze customer behavior, then use the results to serve their customers and tailor content to their target audience.
- Education: Organizations in the education sector use these technologies to track student behavior and progress over time. They also track instructors’ or teachers’ performance based on subject matter, student count, student progress, and more.
- Healthcare: Institutions analyze and visualize public health data to track the spread of disease and take preventive measures sooner.
- Banking: Big banks, retail traders, and fund management firms use Hadoop for sentiment measurement, pre-trade analytics, predictive analytics, social analytics, audit trails, and more.

Career opportunities in Hadoop and Big Data

According to the US Bureau of Labor Statistics, mathematician and statistician roles, including data scientist jobs, are projected to grow 36 percent between 2021 and 2031. Some of the most in-demand skills are Apache Hadoop, Apache Spark, data mining, machine learning, MATLAB, SAS, R, data visualization, and general-purpose programming. You can pursue job profiles like:

- Data Analyst
- Data Scientist
- Big Data Architect
- Data Engineer
- Hadoop Admin
- Hadoop Developer
- Software Engineer

IBM also predicts that professionals with Apache Hadoop skills can earn an average salary of around $113,258. Feeling motivated? Let’s explore some good resources where you can learn Big Data and Hadoop and steer your career in a successful direction.

Big Data Architect

The Big Data Architect Masters Program by Edureka helps you become proficient in the systems and tools that Big Data experts use. The program covers training on Apache Hadoop, the Spark stack, Apache Kafka, Talend, and Cassandra. It is an extensive program with 9 courses and 200+ hours of interactive learning, and its curriculum was designed after thorough research on over 5,000 global job descriptions. You will learn skills like YARN, Pig, Hive, MapReduce, HBase, Spark Streaming, Scala, RDD, Spark SQL, MLlib, and 5 others. You can choose the schedule that suits you: morning, evening, weekend, or weekday batches. You can also switch to another batch, and upon completion you get a certificate. You also get lifetime access to all the course content, including installation guides, quizzes, and presentations.

Hadoop Basic

Learn Big Data and Hadoop fundamentals from Whizlabs to develop your skills and grab exciting opportunities. The course covers topics like an introduction to Big Data, data analysis and streaming, Hadoop on the cloud, data models, a Hadoop installation demo, a Python demo, a Hadoop and GCP demo, and a Python with Hadoop demo. It contains 3+ hours of video divided into 8 lectures covering these topics. You get unlimited access to the course content across devices, including Mac, PC, Android, and iOS, along with great customer support. To start this course, you need prior, deep knowledge of the programming languages relevant to your role. Once you complete the program and watch all the videos, you will receive a signed course certificate.
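One common way to use Python with Hadoop is Hadoop Streaming, which lets any executable act as a mapper or reducer: it reads lines on standard input, writes tab-separated key/value lines to standard output, and the framework sorts mapper output by key before the reducer sees it. The sketch below assumes that convention and simulates the sort locally with `sorted`; it illustrates the technique and is not taken from the course.

```python
from itertools import groupby
from typing import Iterable, Iterator


def mapper(lines: Iterable[str]) -> Iterator[str]:
    # Emit "word<TAB>1" for each word, the key/value format Streaming uses by default.
    for line in lines:
        for word in line.strip().lower().split():
            yield f"{word}\t1"


def reducer(sorted_lines: Iterable[str]) -> Iterator[str]:
    # Input arrives sorted by key, so equal words are adjacent and groupby can total them.
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"


if __name__ == "__main__":
    # Local stand-in for the cluster: map, sort by key (the shuffle), then reduce.
    sample = ["Hadoop streaming with Python", "python on hadoop"]
    for line in reducer(sorted(mapper(sample))):
        print(line)
```

On a cluster, the mapper and reducer would typically live in separate scripts that read `sys.stdin` and are passed to the streaming JAR through its `-mapper` and `-reducer` options.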

For Beginners

Udemy’s Big Data & Hadoop for Beginners course teaches the basics of Big Data and Hadoop, along with HDFS, Hive, Pig, and MapReduce, through designing pipelines. It also covers technology trends, the Big Data market, salary trends, and various job roles in the field. You will understand what Hadoop is, how it works, its architecture and components, and how to install it on your system. The course shows how you can use Pig, Hive, and MapReduce to analyze massive data sets, with demos of Hive queries, Pig queries, and HDFS commands, plus sample scripts and data sets. You will learn to write your own Pig and Hive code to process large amounts of data and design data pipelines. The course also teaches modern data architecture, or the Data Lake, and lets you practice with Big Data sets. To start, you need basic SQL knowledge; knowing RDBMS is even better.

Specialization

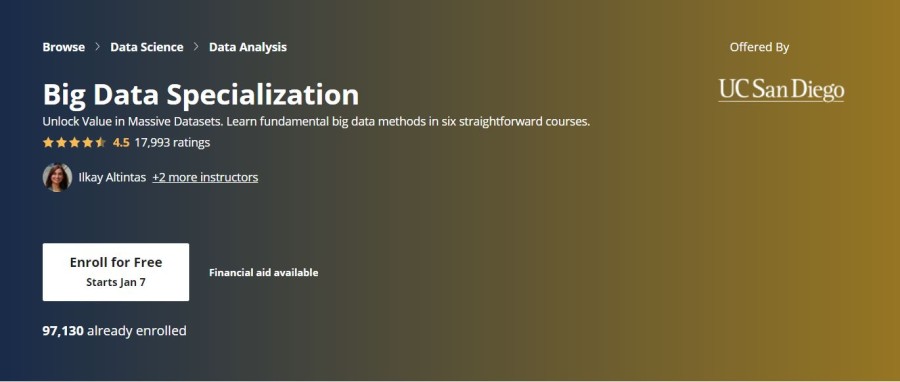

Take up the Big Data Specialization on Coursera, offered by the University of California, San Diego (UC San Diego), to learn the fundamental methods of Big Data in 6 simple courses. And the best thing: you can enroll for free. Apart from Big Data itself, you can acquire skills such as Neo4j, Apache Hadoop, Apache Spark, MongoDB, MapReduce, Cloudera, data modeling, data management, Splunk, and machine learning basics. The specialization helps you make better business decisions by teaching you how to organize, analyze, and interpret Big Data, so that you can apply your insights to real-world problems and questions. It includes a hands-on project that you must finish to complete the specialization and earn a certificate you can share with prospective employers and your professional network. The specialization takes around 8 months to complete and has a flexible schedule. You don’t need any prior knowledge or experience to get started. Subtitles are available in 15 languages, such as English, Hindi, Arabic, Russian, Spanish, Chinese, and Korean.

Hadoop Framework

Also from UC San Diego on Coursera, the Hadoop Platform and Application Framework course is for professionals or programmers new to the field who want to understand the essential tools for collecting and analyzing large amounts of data. Even with no prior experience, you can walk through the Apache Hadoop and Spark frameworks with hands-on examples. You will learn the basic processes and components of the Hadoop software stack, its architecture, and its execution process. The instructor also gives assignments that show how data scientists apply important techniques and concepts like MapReduce to solve Big Data problems. By the end of the course, you will gain skills in Python, Apache Hadoop and Spark, and MapReduce. The course is 100% online, takes around 26 hours to complete, includes a shareable certificate and flexible deadlines, and offers video subtitles in 12 languages.

Mastering Hadoop

This book helps you grasp the newly introduced capabilities and features of Hadoop 3 and crunch and process data with YARN, MapReduce, and other relevant tools. It also helps you sharpen your Hadoop 3 skills and apply what you learn to real-world scenarios and code. It explains how Hadoop works at its core, covers sophisticated concepts of multiple tools, shows how to protect your cluster, and helps you discover solutions. With this guide, you can address typical issues, including using Kafka efficiently, ensuring reliable message delivery, designing for low latency, and handling huge data volumes. By the end of the book, you will gain deep insights into distributed computing with Hadoop 3, build enterprise-level apps using Flink, Spark, and more, and develop high-performance, scalable Hadoop data pipelines.

Learning Hadoop

LinkedIn is an excellent place to grow your professional network and enhance your knowledge and skills. This 4-hour course covers an introduction to Hadoop, the essential Hadoop file systems, MapReduce, the processing engine, programming tools, and Hadoop libraries. You will learn how to set up a development environment, optimize and run MapReduce jobs, build workflows for scheduling jobs, and write basic queries with Pig and Hive. You will also learn about the Spark libraries you can use with Hadoop clusters and the various options for running ML jobs on top of a Hadoop cluster. With this course, you can acquire skills in Hadoop administration, database administration, database development, and MapReduce. Upon completion, LinkedIn provides a shareable certificate that you can showcase on your LinkedIn profile, or download and share with potential employers.

Fundamentals

Learn Big Data Fundamentals on edX to understand how this technology is driving change in organizations, along with important techniques and tools such as the PageRank algorithm and data mining. The course is offered by the University of Adelaide, and over 41k people have already enrolled. It is part of a MicroMasters program and runs for 10 weeks at 8-10 hours of effort per week. And the course is FREE. However, if you want a certificate upon completion, you need to pay around $199. It requires intermediate-level knowledge of the subject and is self-paced. If you want to pursue the MicroMasters program in Big Data, they advise completing Computational Thinking & Big Data and Programming for Data Science before taking this course. You will learn why Big Data matters, the challenges companies face when analyzing large data, and how Big Data addresses them. Towards the end, you will understand various Big Data applications in research and industry.
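Since PageRank is named as one of the techniques covered, here is a minimal power-iteration version of it in Python. It assumes a small in-memory graph given as a dict from each page to the pages it links to (every link target must also be a key), the commonly used damping factor of 0.85, and a fixed number of iterations instead of a convergence test. It is a sketch of the general algorithm, not the course’s own material.

```python
from typing import Dict, List


def pagerank(links: Dict[str, List[str]], damping: float = 0.85,
             iterations: int = 50) -> Dict[str, float]:
    """Power-iteration PageRank on a small link graph (page -> pages it links to)."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(iterations):
        # Every page receives the "random jump" share (1 - d) / n.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outlinks in links.items():
            if outlinks:
                # A page splits its damped rank equally among the pages it links to.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # Dangling page (no outlinks): spread its rank over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


if __name__ == "__main__":
    graph = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
    for page, score in sorted(pagerank(graph).items()):
        print(page, round(score, 4))
```

In this three-page graph, C ranks highest because both A and B link to it. At Big Data scale, the same per-iteration update is usually distributed, for example as a sequence of MapReduce or Spark jobs.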

Data Engineer

The Data Engineering course by Udacity opens up new career opportunities in data science. Its estimated duration is 5 months at 5-10 hours of effort per week, and it requires an intermediate understanding of SQL and Python. You will learn how to build a Data Lake and a data warehouse, create data models with Cassandra and PostgreSQL, work with huge datasets using Spark, and automate data pipelines with Apache Airflow. At the end of the course, you apply your skills by completing a capstone project.
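Apache Airflow describes a pipeline as a directed acyclic graph (DAG) of tasks and starts each task once its upstream tasks have finished. The sketch below illustrates only that scheduling idea with Python’s standard-library `graphlib` (Python 3.9+); it does not use Airflow’s API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Dict, List, Set

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE: Dict[str, Set[str]] = {
    "extract_events": set(),
    "extract_users": set(),
    "stage_to_lake": {"extract_events", "extract_users"},
    "load_warehouse": {"stage_to_lake"},
    "quality_check": {"load_warehouse"},
}


def run_in_waves(dag: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; tasks in the same wave do not depend on each other."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every task whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished so downstream tasks unlock
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(run_in_waves(PIPELINE), start=1):
        print(f"wave {i}: {wave}")
```

In the sample pipeline, the two extract tasks land in the same wave, which is where a scheduler such as Airflow could run them in parallel before the downstream load steps.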

YouTube

Edureka provides a full Big Data & Hadoop video course on YouTube. How cool is that? You can access it anytime, anywhere, at no cost. This full-course video helps you learn and understand these concepts in detail, and it suits both newcomers and experienced professionals who want to master their Hadoop skills. It covers an introduction to Big Data, its associated issues, use cases, and Big Data Analytics with its stages and types. Next, it explains Apache Hadoop and its architecture; HDFS with its replication, data blocks, and read/write mechanism; and the DataNode, NameNode, checkpointing, and Secondary NameNode. You will then learn about MapReduce, its job workflow and word-count program, and YARN and its architecture. It also explains Sqoop, Flume, Pig, Hive, HBase, code sections, distributed cache, and more. In the last hour of the video, you will learn about Big Data Engineers: their skills, responsibilities, learning path, and how to become one. The video ends with some interview questions that might help you crack real interviews.
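Checkpointing, covered in the video alongside the Secondary NameNode, is easier to picture with a toy model: the NameNode keeps a namespace image (fsimage) plus an edit log of changes made since the last checkpoint, and a checkpoint merges the log into a fresh image so that the log starts empty again. The Python below is a hypothetical, heavily simplified sketch of that idea (the namespace is just a dict of file paths to replication factors); it does not reflect HDFS’s real file formats or APIs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical edit-log record: (operation, path, replication factor).
Edit = Tuple[str, str, int]


@dataclass
class NameNodeState:
    fsimage: Dict[str, int] = field(default_factory=dict)  # last checkpointed namespace
    edits: List[Edit] = field(default_factory=list)        # changes since that checkpoint

    def log(self, op: str, path: str, replication: int = 3) -> None:
        # Changes are appended to the edit log instead of rewriting the image.
        self.edits.append((op, path, replication))

    def current_namespace(self) -> Dict[str, int]:
        # Replaying the edit log on top of the image yields the live namespace.
        namespace = dict(self.fsimage)
        for op, path, replication in self.edits:
            if op == "create":
                namespace[path] = replication
            elif op == "delete":
                namespace.pop(path, None)
            elif op == "setrep":
                if path in namespace:
                    namespace[path] = replication
            else:
                raise ValueError(f"unknown operation: {op}")
        return namespace

    def checkpoint(self) -> None:
        # Simplified version of the Secondary NameNode's job: merge the edits into
        # a new image, then start an empty log so a restart has less to replay.
        self.fsimage = self.current_namespace()
        self.edits = []


if __name__ == "__main__":
    state = NameNodeState()
    state.log("create", "/data/a.csv")
    state.log("create", "/data/b.csv", 2)
    state.log("delete", "/data/a.csv")
    state.checkpoint()
    print(state.fsimage, state.edits)
```

The design point the sketch captures is that the namespace is never lost between checkpoints: image plus log always reproduce it, and checkpointing only shortens the log.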

Conclusion

The future of data science looks bright, and so does a career built on it. Big Data and Hadoop are two of the most widely used technologies in organizations across the globe, and hence the demand for jobs in these fields is high. If it interests you, take up a course from any of the resources I just mentioned and prepare to land a lucrative job. All the best! 👍
